Thursday, January 15, 2009

Tuesday, August 21, 2007

AnatomicRig 0

The user is now required to supply both the mesh and the (bound) skeleton (automatic skinning is still an option to be discussed later). Detailed studies of existing character types (such as primates, canines, felines, deer, cows, etc.) are to be made, with the results entered in a database. The purpose is to find correlations between known character types (e.g. how many ways cows are similar to horses), and to derive common parameters for these categories of characters (within or across categories). With this knowledge, the supplied input will be analysed and matched against the database to determine which category of characters it belongs to (or categories, given a centaur or mermaid). Thereafter, the input would be updated (adding more bones or mesh, altering positions, etc.) according to the common parameters identified earlier, to add realism and improve the anatomical correctness of the model. Users can also specify values for other attributes associated with the particular category (e.g. weight, mass, agility, etc.), which would again alter the model based on the database.
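To make the matching step a bit more concrete, here is a minimal sketch of how an input could be compared against such a database. Everything in it is an assumption for illustration: the parameter names (`leg_to_spine`, `arm_to_leg`, `neck_to_spine`), the numbers (placeholders, not measured anatomical data), the Euclidean distance and the `tolerance` threshold. Returning every category close to the best match is one simple way to allow for hybrids such as a centaur.

```python
import math

# Hypothetical database: made-up proportion parameters per category.
# These numbers are placeholders, not real anatomical measurements.
CATEGORY_DB = {
    "primate": {"leg_to_spine": 1.10, "arm_to_leg": 1.00, "neck_to_spine": 0.20},
    "canine": {"leg_to_spine": 0.80, "arm_to_leg": 0.95, "neck_to_spine": 0.30},
    "bovine": {"leg_to_spine": 0.70, "arm_to_leg": 1.00, "neck_to_spine": 0.35},
}


def distance(a, b):
    """Euclidean distance over the parameters both records share."""
    keys = a.keys() & b.keys()
    return math.sqrt(sum((a[k] - b[k]) ** 2 for k in keys))


def match_categories(params, db=CATEGORY_DB, tolerance=0.25):
    """Return the best-matching category first, followed by any other
    category whose distance is within `tolerance` of the best one."""
    scored = sorted((distance(params, ref), name) for name, ref in db.items())
    best = scored[0][0]
    return [name for d, name in scored if d - best <= tolerance]


measured = {"leg_to_spine": 1.10, "arm_to_leg": 1.00, "neck_to_spine": 0.20}
matches = match_categories(measured)
```

A real system would need parameters derived from the anatomical studies and probably a better similarity measure; the point here is only the shape of the lookup.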

Tuesday, August 14, 2007

AutoRig 1.5

How do I generate a skeleton and embed it into a given mesh? Adding joints procedurally and orienting them is really a matter of scripting; the problem here is analysing the mesh itself. For decent deformation the skeleton must be placed appropriately and well away from the mesh boundary. Suppose I went through all the vertices of the mesh to find extreme points: I would still be unable to find positions for elbows, hips and knees because these are not extreme points in the anatomy, and the method would probably take too long if a smoothed mesh were given. The default poses of the given meshes would also likely compromise accuracy. The simplest (and by no means easy) method I can think of now is to decompose the mesh into parts and analyse their volumes, then connect the joints for every part. There are 2 papers on skeleton extraction from National Taiwan University that rather interest me, but currently I'm trying to figure out the paper by Grégoire Aujay, Franck Hétroy, Francis Lazarus and Christine Depraz on Harmonic skeleton for realistic animation, which shows a fast, automatic skeleton generation that can adhere to anatomic characteristics.
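As a rough sketch of the decompose-then-connect idea (not the harmonic skeleton method from the paper), assume the mesh has already been segmented so that every vertex carries a part label. The function names, the labels and the `parent_of` hierarchy below are all hypothetical, and the plain vertex average is only a crude stand-in for a proper volume-based centre.

```python
from collections import defaultdict


def part_centroids(vertices, labels):
    """Average the vertex positions of each labelled part.
    A vertex average only approximates the centre of the part's volume."""
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for (x, y, z), label in zip(vertices, labels):
        acc = sums[label]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    return {
        label: (sx / n, sy / n, sz / n)
        for label, (sx, sy, sz, n) in sums.items()
    }


def build_skeleton(centroids, parent_of):
    """Return bones as (parent_position, child_position) pairs,
    following an assumed part hierarchy (child -> parent, root -> None)."""
    return [
        (centroids[parent], centroids[child])
        for child, parent in parent_of.items()
        if parent is not None
    ]


# Toy input: four vertices for an upper arm, two for a forearm.
vertices = [(0, 0, 0), (2, 0, 0), (0, 2, 0), (2, 2, 0), (4, 0, 0), (6, 0, 0)]
labels = ["upper_arm"] * 4 + ["forearm"] * 2

centroids = part_centroids(vertices, labels)
bones = build_skeleton(centroids, {"upper_arm": None, "forearm": "upper_arm"})
```

The hard part, producing the labels in the first place, is exactly the mesh analysis problem described above; this only shows what happens once a decomposition exists.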

Sunday, August 5, 2007

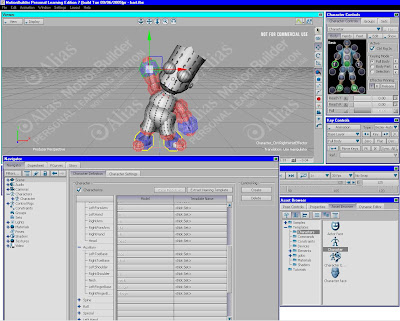

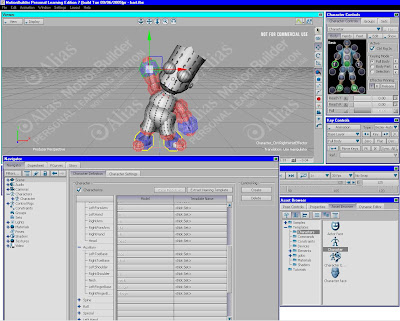

AutoRig 1

Primitive rig -- no colours were used to differentiate between right and left

Polygons are used as iconic controls (instead of nurbs curves as most commonly seen).

Details were left out: footrolls and toes, fingers, jaws, etc.

IK/FK switching options were not implemented. The arms were rigged with only FK controls (experts online say arms are better left FK for precision).

Been slow in implementing the primitive rig. There are many ways to go about doing it and I pretty much ended up trying out every one of them. I tried using constraints first, then moved on to setting driven keys (adding extra attributes and controlling them with slider bars), then resorted to the connection editor and finally the expression editor. I have to say the expression editor is probably the best way to go about it because the executed steps are clearly shown (hypergraphs can get rather confusing because of the way the nodes are displayed). Still, there were bugs everywhere. In the end the primitive rig is a combination of all the methods stated above. I had also written a MEL script for a simple user interface to select the controls, but it seems my copy of Maya could not source the script no matter which directory I placed it in.

Next up would be procedural bone placement (to be done within the next week or 2) and then procedural skinning (notice my model did not go through proper skin weights painting, and the areas under the armpits and shoulders deformed unnaturally). So far the main area of concern is still the skin weights, and my new concern is the rigging of the high-range-of-motion areas (shoulders and thighs). Forums and websites I have visited describe approaches ranging from adding influence objects (muscles?) to actually constructing the entire shoulder girdle (a 5-bone structure, http://www.animationartist.com/2003/08_aug/tutorials/rigging_anatomy.htm#, whereas I'm using a 2-bone girdle representing only the clavicle and the shoulder) to allow for more realistic influences over the shoulder area.

Apparently, Maya does not have event handlers (such as onMouseDown commands in ActionScript).

To be done: procedural bone placement and skin weights painting, shoulder and thigh rigging research (1 week).

Edit: found out why I couldn't source my script.

Monday, July 23, 2007

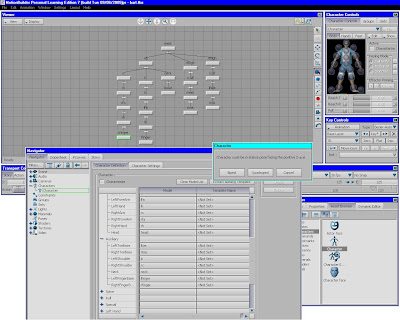

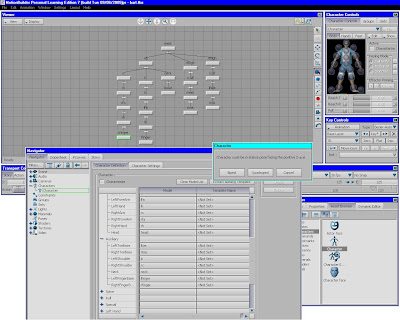

AutoRig 0

Back from 2 weeks of reservist and another 2 weeks of settling personal agendas.

Been exploring Maya tools for some time. Read up on the steps in character setup, particularly the setup of joints, IK/FK switching, smooth vs rigid binding, IK splines, various constraints, setting driven keys and 'iconic' rigs (Maya Character Animation by Jae-Jin Choi). Facial rigs seem out of the question for the time being. Had spent some time on soft/rigid bodies and fields (turbulence, uniform, ...) though I'm unsure of their necessity.

My main concern at this stage has to do with skin binding. Given user inputs of models and geometries, we are supposed to create a skeleton, bind it and rig controls for the model. Character setup is a highly individual process for each different model (I think Jovan Popovic said something about this in 1 of his papers), though the same rig might be reused for similar models. Neither the rigid nor the smooth binding method in Maya seems, on its own, to give the user precise control of vertex weighting; that takes follow-up work such as painting skin weights in smooth bind, or using deformation lattices in rigid bind, as well as creating joint flexors and manipulating them precisely to give the best animation possible (these are, in my opinion, processes better done manually than automatically). We might be able to create sliding bars for users to adjust the drop-off rate and max influences for each vertex in smooth binding. I guess I would need guidance too on the inclusion of set driven keys in automatic rigging because, according to what I've learnt, it is also a matter of user preference.
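To show what those two sliders would actually change, here is a minimal sketch of distance-based skin weighting. It is an assumption-laden illustration, not Maya's actual smooth-bind formula: the falloff `1 / (1 + d) ** dropoff`, the function name `smooth_weights` and the joint names are all made up for the example.

```python
import math


def smooth_weights(vertex, joints, dropoff=4.0, max_influences=2):
    """Weight a vertex against nearby joints.

    Assumed falloff: influence = 1 / (1 + distance) ** dropoff, so a higher
    dropoff makes far joints lose influence faster. Only the strongest
    `max_influences` joints are kept, then weights are normalised to sum to 1.
    """
    raw = {
        name: 1.0 / (1.0 + math.dist(vertex, position)) ** dropoff
        for name, position in joints.items()
    }
    strongest = sorted(raw, key=raw.get, reverse=True)[:max_influences]
    total = sum(raw[name] for name in strongest)
    return {name: raw[name] / total for name in strongest}


# Hypothetical arm joints laid out along the x axis.
joints = {
    "shoulder": (0.0, 0.0, 0.0),
    "elbow": (2.0, 0.0, 0.0),
    "wrist": (4.0, 0.0, 0.0),
}

# A vertex halfway between shoulder and elbow.
weights = smooth_weights((1.0, 0.0, 0.0), joints)
```

With these inputs the vertex ends up split evenly between the shoulder and the elbow, and the wrist is dropped because only two influences are allowed. Areas like the armpit are where such a purely distance-based rule tends to break down, which is why manual weight painting still matters.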

I have also come across a few ways of rigging a character but I still find the iconic representation to be the most intuitive of all. I'm trying to rig my own character but apart from IK in the knees and elbows, and splines in the spine, along with constraints applied to the joints, I am not yet able to replicate the full body rig as achieved in Motion Builder. My idea of autorigging is rather similar to Motion Builder's because it allows users to input preferred settings. The difference would be that Motion Builder requires an input skeleton, whereas we could implement a system that generates it. Perhaps it could be modelled after Jovan Popovic's idea of using a given skeleton. Then again, the weights applied to each vertex could potentially be done by machine learning.

Tuesday, June 5, 2007

Knowledge-driven character rigging

This is what has been agreed on the project so far.

This project is about creating controls for user-supplied meshes dynamically. This process ("rigging") covers various aspects of character setup, such as creating animation controls for the skeleton (which is to be created by the computer with inputs from the user regarding joint designations), character extensions, soft/rigid bodies and secondary motions (in particular determining various keys to control them; set-driven keys as mentioned can be used to control multiple attributes, exactly how is to be determined later). While a working end-product would be expected, the larger aim of this project would be to develop a practical framework/methodology which can be applied or modified for other similarly based projects. The process by which we can achieve knowledge-driven character rigging is therefore more important.

Because this project is oriented towards creating a tool for beginners (some of the papers I've come across are similar in this aspect), it is expected that the rigs created be generic enough to be applied to other meshes with some variations made. The process should be highly modularised as well. This makes it easy to spot and reduce errors and unwanted influences. There should also be a learning mechanism in place that can detect "dangerous" parameters from user inputs and come up with a best learned solution. This is to prevent users from committing errors (especially since it is aimed at helping novices). Lastly, an intuitive HCI is to be developed. Current offerings in the market have highly unintuitive HCI due to their extremely complex functionality. For now the focus would be on bipeds.

I found that MotionBuilder from Alias is rather similar to what we wish to achieve, but it requires the skeleton as an input from the user, which it then rigs according to user preferences.

User input parameters

Rig is created with user inputs

There is existing research on generating skeletons generic enough to fit different meshes. These methods only work on bipeds, however, and the mesh must have a certain general shape or the rigging would fail. DreamWorks discussed how difficult it is to dynamically "shift" a rigged model from a biped to a quadruped with their character Puss-in-Boots.

RELATED WORKS

Automatic Rigging and Animation of 3D Characters by Ilya Baran and Jovan Popovic

Morphable Model of Quadrupeds Skeletons for Animating 3D Animals by Lionel Reveret, Laurent Favreau, Christine Depraz, Marie-Paule Cani

The Chronicles of Narnia: The Lion, The Crowds and Rhythm and Hues from Rhythm and Hues Studios

Art-Directed Technology: Anatomy of a Shrek2 Sequence from DreamWorks 2004

Synthesizing realistic spine motion using traditional rig controllers by Jabbar Raisani

Physically Based Rigging for Deformable Characters by Steve Capell, Matthew Burkhart, Brian Curless, Tom Duchamp, Zoran Popović

Interactive Skeleton Extraction for 3D Animation using Geodesic Distances by Takuya Oda, Yuichi Itoh, Wataru Nakai, Katsuhiro Nomura, Yoshifumi Kitamura, Fumio Kishino

Misc

Style-Based Inverse Kinematics by Keith Grochow, Steven L. Martin, Aaron Hertzmann, Zoran Popović

Rigging a Horse and Rider: Simulating The Predictable and Repetitive Movement Of The Rider by Jennifer Lynn Kuhnel

Grove: A Production-Optimised Foliage Generator for The Lord of The Rings: The Two Towers by Matt Aitken, Martin Preston

Sunday, April 22, 2007

Reflections: Farting in the mirror

The term 'user experience' comes tagged with a multitude of definitions, none of which fully explains the term but all of which are correct nonetheless, because experience can refer to functionality, aesthetics, perceived pleasure, or any combination of them. This shows how varied experience can be for every individual. It is important to understand the needs of each individual user, yet it is impossible to design for only one person. We have to design products for the mass market, yet to design for everyone is to design for no one. Such is the contradiction within the discipline of UX that forces us to keep an open mind over the strategies to employ.

Current market practices are increasingly geared towards the individual consumer because that is the vital point of differentiation for a product. Increasingly, marketing strategies are placing more importance on branding and user experience creation than on the product itself. An example would be the iPod. Functionality-wise, it is no match for the Creative Zen, but iPod sales far exceed the Zen's because of the marketing strategies Apple took: heavy advertising and the creation of perceived user pleasures. Even though functionality accounts for part of the overall experience, the iPod's focus on visual qualities proved to be successful. Looking at Apple's success it is not difficult to understand why user experience is gaining popularity as an academic discipline.

The greatest gain from this course is the identification of human behaviours and traits of emotions. For example, the 4-pleasures framework can accurately describe most circumstances in which people feel pleasure. Pleasures of need and appreciation are also personally valued, as is discovering the 3 attributes of emotions. Understanding human needs and psychology is something that will benefit me for the rest of my life.

Because of my background from the school of computing, I realised that creating functionality alone does not really count towards creating an 'experience'. In my course of study I've come across various chances to develop small-scale software loaded with functionality implemented to the best of my knowledge. The software I've created, though functional, does little to create unique experiences for users; it works, but you won't feel a rush of pleasure using it. It is just like any other similar utility software you might find anywhere, and there's no differentiation. That's why in my final project my team mates and I decided to try and create an experience that truly evokes emotions (all 3 attributes of it, hopefully) and appeals not only to our visual faculties, but also to our cognitive faculties. Truth be told, we had several projects already done and, in fact, we could have handed those up if we had chosen to go for functionality. But this is not a CS course, and functionality is not the biggest concern here.

Then again, UX is also about creating functional products (not just websites, but the principles are the same) that make an impact. It's no mean feat, man. The LG Prada is a functions-loaded phone, but it is really more than just that, combining technology with aesthetics. Shit, it looks cool. Looks better than me. It's cool to use. Hell, it's cool to own it. The overall quality of experience interacting with this product, be it aesthetics or functions, is undeniably good. But which phone today isn't a pleasure to toy with? The age of user-centred strategies is upon us. Gone are the days when everyone owned the same damn pager (even then they made an effort to make the damn pager look trendy, who could forget the gold chains hanging about?). Catch the wave or be washed away forever.

It is my opinion that this module has helped me realise the subtleties of designing for users. Consumers are not forced to buy products anymore. Their choice is their power. If we want to chase their skirts we have to let them feel the difference. Exactly how that can be done is rocket science. But, well, what I've learnt here is a first step.
